MicroK8s OIDC

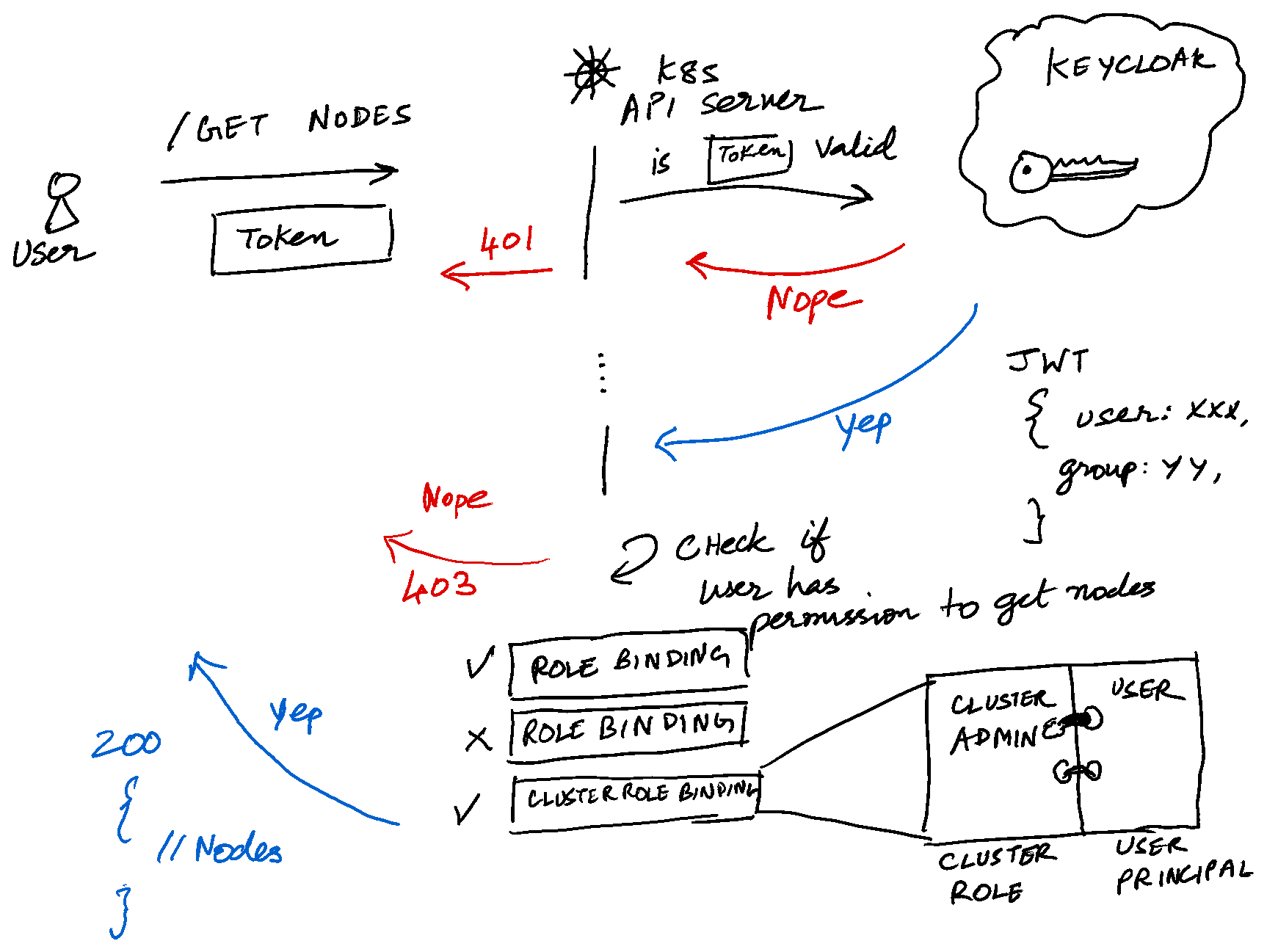

Configuring MicroK8s to work with an OIDC provider is straightforward: we add a few OIDC-specific flags to the Kubernetes API server (kube-apiserver) and restart it.

In this case, we’re going to use Keycloak as the IAM provider. That said, the procedure should work the same way with other OIDC providers.

As a first step, SSH into the VM running the master node, open /var/snap/microk8s/current/args/kube-apiserver, and add the following lines:

```
--oidc-issuer-url=https://usw2.auth.ac/auth/realms/<realm-name>
--oidc-client-id=<your-client-id>
```
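If you would rather script this edit (for example when setting up several clusters), an idempotent helper might look like the sketch below. The names `ensure_flags` and `ARGS_FILE` are hypothetical, and the helper assumes the args file holds one flag per line, written as `--name=value` or `--name value`. It must run as root to write under /var/snap.

```python
from pathlib import Path

# Args file MicroK8s reads the API server flags from (path taken from the step above).
ARGS_FILE = Path("/var/snap/microk8s/current/args/kube-apiserver")


def ensure_flags(args_file: Path, flags: dict) -> list:
    """Append every flag whose name is not already set in args_file.

    Hypothetical helper: assumes one flag per line. Returns the lines it
    appended (an empty list if nothing changed).
    """
    text = args_file.read_text()
    existing = {
        # "--name=value" and "--name value" both reduce to "--name"
        line.strip().split("=", 1)[0].split()[0]
        for line in text.splitlines()
        if line.strip().startswith("--")
    }
    added = [f"{name}={value}" for name, value in flags.items() if name not in existing]
    if added:
        # Start on a new line if the file does not already end with one.
        sep = "" if not text or text.endswith("\n") else "\n"
        args_file.write_text(text + sep + "\n".join(added) + "\n")
    return added
```

For example, `ensure_flags(ARGS_FILE, {"--oidc-issuer-url": "https://usw2.auth.ac/auth/realms/<realm-name>", "--oidc-client-id": "<your-client-id>"})`, followed by the restart below. The helper never overwrites a flag that is already present, even with a different value, so an existing setting has to be changed by hand.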

I just want to take a moment to mention that the folks at Phase Two provide hosted Keycloak and have a generous free tier.

Then restart MicroK8s so that the API server picks up the new flags:

```
sudo snap restart microk8s
```
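Optionally, before requesting any tokens, you can sanity-check the issuer URL. The Kubernetes documentation describes `--oidc-issuer-url` as the URL the API server uses to discover the provider’s public signing keys, and the `iss` claim of incoming tokens has to match it. The sketch below (hypothetical helper names, standard library only) fetches the provider’s OIDC discovery document and checks both points.

```python
import json
import urllib.request


def discovery_url(issuer: str) -> str:
    # OIDC discovery: drop any trailing "/" from the issuer, then append the well-known path.
    return issuer.rstrip("/") + "/.well-known/openid-configuration"


def verify_discovery(doc: dict, issuer: str) -> None:
    """Raise ValueError if the discovery document does not fit the configured issuer."""
    if doc.get("issuer") != issuer:
        raise ValueError(f"issuer mismatch: provider says {doc.get('issuer')!r}, flag is {issuer!r}")
    if "jwks_uri" not in doc:
        raise ValueError("discovery document has no jwks_uri (signing keys)")


def check_issuer(issuer: str, timeout: float = 10.0) -> dict:
    """Fetch the discovery document over HTTPS and validate it."""
    with urllib.request.urlopen(discovery_url(issuer), timeout=timeout) as resp:
        doc = json.load(resp)
    verify_discovery(doc, issuer)
    return doc
```

Call it with exactly the value you put in `--oidc-issuer-url`, e.g. `check_issuer("https://usw2.auth.ac/auth/realms/<realm-name>")` with your real realm name. A mismatch here (a trailing slash, a wrong realm) would most likely also make the API server reject the tokens.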

That’s it. Your Kubernetes cluster should now accept OIDC tokens and authenticate the users they identify. Let’s test whether this works as expected. For that, we first need an ID token, which we can get from Keycloak with the python-keycloak library:

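```python
from keycloak import KeycloakOpenID  # pip install python-keycloak

keycloak_openid = KeycloakOpenID(
    server_url="https://usw2.auth.ac/auth/",
    client_id="your-client-id",
    realm_name="your-realm",
    client_secret_key="Sup3RSecR3+",
)

# Password grant for user "arun" (the client needs Direct Access Grants enabled).
# Returns a dict of tokens, including the id_token we need.
token = keycloak_openid.token("arun", "arun-password")
id_token = token["id_token"]
```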

We’ll now take the id_token from the token dict and pass it to kubectl to list the nodes in our cluster:

```
$ kubectl --token=eyJhbGciOi...blah blah ...DZP3OGg get nodes
Error from server (Forbidden): nodes is forbidden: User "https://usw2.auth.ac/auth/realms/your-realm#d1a4d8ed-d105-415b-a923-bff729d228da" cannot list resource "nodes" in API group "" at the cluster scope
```
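The `User` in this error is not the bare user ID but the issuer URL, a `#`, and the token’s `sub` claim. That matches the defaults the Kubernetes authentication documentation gives for `--oidc-username-claim` (defaults to `sub`) and `--oidc-username-prefix` (defaults to the issuer URL plus `#` unless the claim is `email`; the value `-` disables prefixing). The sketch below reimplements those documented rules for illustration only; it is not the API server’s code, and `k8s_username` is a hypothetical name. It is handy for working out what to put in an RBAC binding.

```python
from typing import Optional


def k8s_username(claims: dict, issuer_url: str,
                 username_claim: str = "sub",
                 username_prefix: Optional[str] = None) -> str:
    """Derive the RBAC username from token claims, following the documented
    defaults of --oidc-username-claim and --oidc-username-prefix."""
    value = claims[username_claim]
    if username_prefix is None:
        # No prefix flag: claims other than "email" are prefixed with "<issuer URL>#".
        prefix = "" if username_claim == "email" else issuer_url + "#"
    elif username_prefix == "-":
        # "-" disables prefixing entirely.
        prefix = ""
    else:
        prefix = username_prefix
    return prefix + value
```

With `issuer_url="https://usw2.auth.ac/auth/realms/your-realm"` and arun’s `sub` claim, this returns `https://usw2.auth.ac/auth/realms/your-realm#d1a4d8ed-d105-415b-a923-bff729d228da`, the same string the API server reports.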

Rightly so. The token itself was accepted (otherwise we would have seen an Unauthorized error rather than Forbidden), but arun doesn’t have the RBAC permissions needed to list the nodes in the cluster. We need a ClusterRoleBinding that grants the cluster-admin ClusterRole to arun’s user principal.

We can find arun’s user principal by decoding the ID token, which is a JWT. Its payload looks like this:

```json
{
  "exp": 1689612831,
  "iat": 1689612531,
  "auth_time": 0,
  "jti": "939fe380-91fb-4d21-a702-77401ea47f84",
  "iss": "https://usw2.auth.ac/auth/realms/your-realm",
  "aud": "account",
  "sub": "d1a4d8ed-d105-415b-a923-bff729d228da",
  "typ": "ID",
  "azp": "account",
  "session_state": "b72df618-6a92-40d9-9c2b-a372a7f9e15e",
  "at_hash": "b7x6R6tQ_XQ78e44HTS6_g",
  "acr": "1",
  "sid": "b72df618-6a92-40d9-9c2b-a372a7f9e15e",
  "email_verified": true,
  "groups": [],
  "preferred_username": "arun",
  "email": "arun@snailmail.com"
}
```
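A payload like the one above can be printed locally with only the Python standard library. The sketch below (hypothetical helper `jwt_claims`) base64url-decodes the middle segment of the token and deliberately skips signature verification, so use it only to inspect tokens, never to trust them; the API server performs its own verification.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Return a JWT's payload claims WITHOUT checking the signature (inspection only)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a compact JWT with three dot-separated segments")
    payload = parts[1]
    # JWTs use unpadded base64url; add back the '=' padding the decoder expects.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))
```

With the python-keycloak snippet from earlier, `print(json.dumps(jwt_claims(token["id_token"]), indent=2))` prints the claims in the same shape as shown above.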

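By default, Kubernetes takes the username from the token’s sub claim, which is d1a4d8ed-d105-415b-a923-bff729d228da in our case. Because that claim is not email, the API server also prefixes it with the issuer URL followed by #, which is why the Forbidden error above names the user as the issuer URL, #, and this ID. The claim can be changed with the --oidc-username-claim flag and the prefix with --oidc-username-prefix. Let’s make arun a cluster admin by binding the cluster-admin ClusterRole to that full username. Save the following as arun-cluster-admin-crb.yaml:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-admin-binding
subjects:
  - kind: User
    # Full username: issuer URL + "#" + sub claim, as reported in the error message
    name: "https://usw2.auth.ac/auth/realms/your-realm#d1a4d8ed-d105-415b-a923-bff729d228da"
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
```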

```
$ kubectl apply -f arun-cluster-admin-crb.yaml
clusterrolebinding.rbac.authorization.k8s.io/cluster-admin-binding created
```
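Before retrying, keep in mind that these tokens are short-lived: in the decoded payload above, `exp - iat = 1689612831 - 1689612531 = 300`, so that ID token was valid for only five minutes, and the one used in the first attempt has probably expired. A small check like the sketch below (hypothetical helper names, standard library only) tells you whether a token’s decoded claims are still usable.

```python
import time
from typing import Optional


def token_lifetime(claims: dict) -> int:
    """Total validity window in seconds (exp - iat)."""
    return claims["exp"] - claims["iat"]


def seconds_left(claims: dict, now: Optional[float] = None) -> float:
    """Seconds until exp; zero or negative means the token has expired."""
    current = time.time() if now is None else now
    return claims["exp"] - current
```

For the payload above, `token_lifetime` returns 300. If `seconds_left` is at or below zero, request a new token rather than reusing the old one.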

Now, generate a new token using the above steps and check if arun can fetch the nodes.

```
$ kubectl --token=eyJhbGciOi...blah blah ...ZTt9S-Bg get nodes
NAME         STATUS   ROLES    AGE    VERSION
group-03-0   Ready    <none>   21h    v1.27.2
group-02-1   Ready    <none>   21h    v1.27.2
group-01-0   Ready    <none>   2d3h   v1.27.2
group-02-0   Ready    <none>   21h    v1.27.2
```

This is all fine and dandy when we can set kube-apiserver flags to accept OIDC tokens. But what if we can’t, as is the case with most managed Kubernetes providers? We’ll look at how to enable this functionality on managed Kubernetes in the next post.