OpenEBS Jiva Quickstart

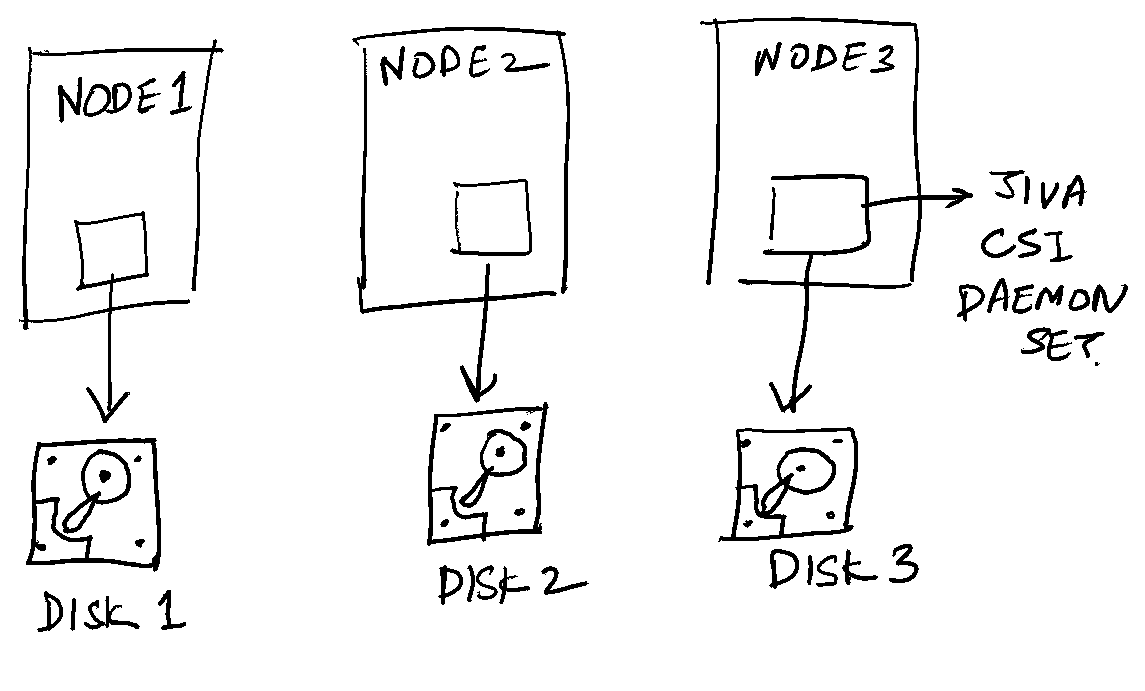

Now that we have installed OpenEBS, let’s set up a few storage classes on top of it. We will start with Jiva, a highly available block volume option that stores its data on the nodes’ local storage.

What makes it “highly available” is that the Jiva operator keeps multiple copies (replicas) of the volume on different nodes, so the data survives the failure of a node.

Let’s try to configure a Jiva volume in our single-node cluster. The first step is to install OpenEBS with Helm, if it isn’t installed already.

    helm install openebs --namespace openebs openebs/openebs --create-namespace

This installs two flavours of local storage class.

    $ kubectl get sc
    NAME               PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    openebs-device     openebs.io/local   Delete          WaitForFirstConsumer   false                  120m
    openebs-hostpath   openebs.io/local   Delete          WaitForFirstConsumer   false                  120m

These would suffice for a 1-node cluster, but we are setting up Jiva to illustrate data redundancy. Let’s upgrade the Helm release to install Jiva.

    helm upgrade openebs openebs/openebs --namespace openebs --reuse-values --set jiva.enabled=true

NOTE: this upgrade might fail, as Jiva requires a few CRDs (custom resource definitions) to be installed, and Helm doesn’t handle CRDs smoothly during a chart upgrade. The best course of action is to install the CRDs separately and re-run the above command. Once Jiva is installed successfully, you should see a new Jiva storage class.

    $ kubectl get sc
    NAME                       PROVISIONER           RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
    openebs-device             openebs.io/local      Delete          WaitForFirstConsumer   false                  120m
    openebs-hostpath           openebs.io/local      Delete          WaitForFirstConsumer   false                  120m
    openebs-jiva-csi-default   jiva.csi.openebs.io   Delete          Immediate              true                   119m
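
If the upgrade does fail on missing CRDs, it helps to know which ones are absent before installing them. Here is a minimal sketch that compares a CRD listing against the names you expect. The helper name `missing_crds` is made up for this post, and the two CRD names in the usage comment are my assumption about what the Jiva operator registers, so check them against the chart version you are installing.

```shell
# Hypothetical helper: print every expected CRD name that is NOT in the listing.
# stdin: output of `kubectl get crd -o name`, one resource per line, e.g.
#   customresourcedefinition.apiextensions.k8s.io/jivavolumes.openebs.io
# args:  the CRD names you expect to find
missing_crds() {
  # Keep only the part after the last "/" so each line is a bare CRD name.
  present=$(sed 's|.*/||')
  for crd in "$@"; do
    # -x: whole-line match, -F: fixed string (dots are not wildcards)
    printf '%s\n' "$present" | grep -qxF -- "$crd" || printf '%s\n' "$crd"
  done
}

# Usage (the CRD names below are assumptions, not taken from the chart):
#   kubectl get crd -o name | missing_crds jivavolumes.openebs.io jivavolumepolicies.openebs.io
```

An empty result means every name you passed is already registered, and the `helm upgrade` can be re-run.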

Next, let’s create a new PVC with the Jiva storage class.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: example-jiva-csi-pvc
    spec:
      storageClassName: openebs-jiva-csi-default
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 4Gi

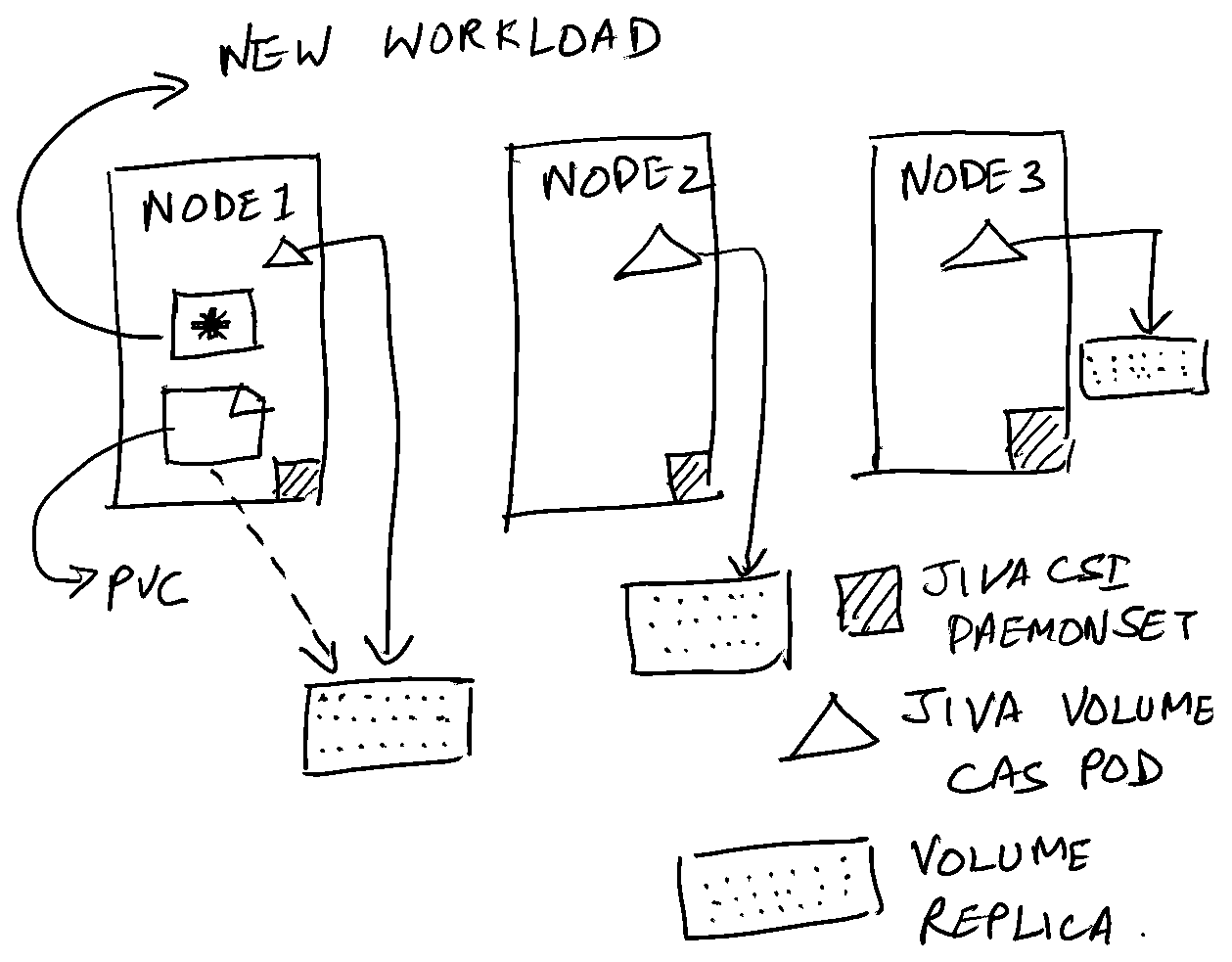

This will create the following resources:

A CAS (container attached storage) controller pod and one replica pod per replica, three in this case, for the PVC we created.

    $ kubectl get pod -n openebs
    NAME                                                              READY   STATUS    RESTARTS   AGE
    openebs-localpv-provisioner-65cdb6895-vscrn                       1/1     Running   0          58m
    openebs-ndm-operator-74dcc95548-9lxxw                             1/1     Running   0          58m
    openebs-ndm-ms2x6                                                 1/1     Running   0          58m
    openebs-jiva-operator-5cc8d86fb8-nj67c                            1/1     Running   0          56m
    openebs-jiva-csi-node-bv7qq                                       3/3     Running   0          56m
    openebs-jiva-csi-controller-0                                     5/5     Running   0          56m
    pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1-jiva-rep-1               0/1     Pending   0          33m
    pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1-jiva-rep-2               0/1     Pending   0          33m
    pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1-jiva-rep-0               1/1     Running   0          33m
    pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1-jiva-ctrl-8477c8fnhj5h   2/2     Running   0          33m

And a JivaVolume CR which encapsulates the PVC and the controllers.

    $ kubectl get jivavolume -n openebs
    NAME                                       REPLICACOUNT   PHASE     STATUS
    pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1                  Syncing   RO

We can see that the JivaVolume CR is in the “Syncing” phase and 2 of the 3 CAS replica pods are “Pending”. This is because the replica pods have a policy to run on different nodes (remember, Jiva is meant for redundant block storage), and the only one that came up is the one that fit on our single node. If we create a workload that uses this PVC, it stays stuck and never starts.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: busybox
      labels:
        app: busybox
    spec:
      replicas: 1
      strategy:
        type: RollingUpdate
      selector:
        matchLabels:
          app: busybox
      template:
        metadata:
          labels:
            app: busybox
        spec:
          containers:
          - resources:
              limits:
                cpu: 0.5
            name: busybox
            image: busybox
            command: ['sh', '-c', 'echo Container 1 is Running ; sleep 3600']
            imagePullPolicy: IfNotPresent
            ports:
            - containerPort: 3306
              name: busybox
            volumeMounts:
            - mountPath: /var/lib/mysql
              name: demo-vol1
          volumes:
          - name: demo-vol1
            persistentVolumeClaim:
              claimName: example-jiva-csi-pvc

The pod never leaves ContainerCreating because the volume isn’t ready yet: 2 of its 3 replicas aren’t available.

    $ kubectl get pod
    NAME                      READY   STATUS              RESTARTS   AGE
    busybox-7d68d87bd-nsnxl   0/1     ContainerCreating   0          14m
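
To see which replica pods are holding the volume back, you can filter the pod listing from the `openebs` namespace. This is only a sketch: `pending_replicas` is a name I made up, and it assumes replica pods keep the `-jiva-rep-N` suffix and the column order seen in the listings in this post.

```shell
# Hypothetical helper: list Jiva replica pods that are still Pending.
# stdin: output of `kubectl get pod -n openebs --no-headers`
# Columns are assumed to be: NAME READY STATUS RESTARTS AGE
pending_replicas() {
  awk '$1 ~ /-jiva-rep-[0-9]+$/ && $3 == "Pending" { print $1 }'
}

# Usage:
#   kubectl get pod -n openebs --no-headers | pending_replicas
```

Running `kubectl describe pod <name> -n openebs` on any pod it prints should show the scheduler events that explain why the pod could not be placed.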

You can also infer the same from the CAS controller logs.

    $ kubectl logs -f pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1-jiva-ctrl-8477c8fnhj5h -n openebs
    ...
    time="2022-10-31T06:47:34Z" level=info msg="Register Replica, Address: 10.1.249.16 UUID: ef7a2164069883d78c8e834015f3b1d9f583fc7d Uptime: 29m0.045636047s State: closed Type: Backend RevisionCount: 1"
    time="2022-10-31T06:47:34Z" level=warning msg="No of yet to be registered replicas are less than 3 , No of registered replicas: 1"
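
If you would rather not read the log by eye, the registered replica count can be pulled out of that warning line. A rough sketch follows, assuming the message wording stays exactly as in the log above (other Jiva versions may phrase it differently). `registered_replicas` is a hypothetical name.

```shell
# Hypothetical helper: print the most recent "registered replicas" count
# found in controller log lines read from stdin.
registered_replicas() {
  sed -n 's/.*No of registered replicas: \([0-9][0-9]*\).*/\1/p' | tail -n 1
}

# Usage (without -f, so the command returns instead of following the log):
#   kubectl logs pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1-jiva-ctrl-8477c8fnhj5h -n openebs | registered_replicas
```

It prints nothing when no such warning is present in the input.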

Now, we have 2 options to continue using Jiva.

Create a single replica Jiva SC

This is really not worth it, as you’re better off using one of the local storage options. Here’s how a single-replica SC would look:

    allowVolumeExpansion: true
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: openebs-jiva-csi-single-replica
    parameters:
      cas-type: jiva
      policy: openebs-jiva-single-replica-policy
    provisioner: jiva.csi.openebs.io
    reclaimPolicy: Delete
    volumeBindingMode: Immediate

Notice the parameters.policy field. It refers to another CR, a JivaVolumePolicy.

    apiVersion: openebs.io/v1
    kind: JivaVolumePolicy
    metadata:
      name: openebs-jiva-single-replica-policy
      namespace: openebs
    spec:
      replicaSC: openebs-hostpath
      target:
        replicationFactor: 1

If you observe the spec for the above volume policy, you’ll notice that it uses openebs-hostpath underneath.
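
To try the single replica class, a PVC only has to name it in `storageClassName`. The sketch below prints such a PVC so it can be piped into `kubectl apply`. The helper `render_jiva_pvc` and the PVC name in the usage comment are made up for illustration. The storage class name and the rest of the manifest come from the examples in this post.

```shell
# Hypothetical helper: print a PVC manifest for a given name, storage class and size.
render_jiva_pvc() {
  name=$1
  storage_class=$2
  size=$3
  cat <<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: ${name}
spec:
  storageClassName: ${storage_class}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}
EOF
}

# Usage (the PVC name is an example, pick your own):
#   render_jiva_pvc example-jiva-single-replica-pvc openebs-jiva-csi-single-replica 4Gi | kubectl apply -f -
```

With a replication factor of 1, a volume from this class should need only one replica pod, which a single node can host.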

Bump up the number of nodes

The other, smarter option is to add nodes to the cluster. The “Pending” CAS pods are then scheduled on the new nodes, and your workload comes up.

    $ kubectl get pod
    NAME                      READY   STATUS    RESTARTS      AGE
    busybox-7d68d87bd-nsnxl   1/1     Running   1 (32m ago)   147m

The JivaVolume CR shows the volume replica count and status.

    $ kubectl get jivavolume -n openebs
    NAME                                       REPLICACOUNT   PHASE   STATUS
    pvc-92c8c28f-d46c-49ce-9876-1006b8bdb0f1   3              Ready   RW
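
After adding nodes it can take a while for the replicas to sync, so it is handy to wait until the volume reports Ready and RW. A small sketch, assuming the PHASE and STATUS columns stay the last two columns of the listing as shown above. `jivavolumes_ready` is a hypothetical name.

```shell
# Hypothetical helper: succeed only if every JivaVolume row is "Ready" and "RW".
# stdin: output of `kubectl get jivavolume -n openebs --no-headers`
# PHASE and STATUS are read from the end of each row because REPLICACOUNT
# can be empty while the volume is still syncing.
jivavolumes_ready() {
  awk '
    NF >= 2 {
      seen++
      if ($(NF-1) != "Ready" || $NF != "RW") bad = 1
    }
    END { exit ((seen == 0 || bad) ? 1 : 0) }
  '
}

# Usage: poll every 5 seconds until all volumes are ready.
#   until kubectl get jivavolume -n openebs --no-headers | jivavolumes_ready; do sleep 5; done
```

The helper also fails on empty input, so the loop keeps waiting if no JivaVolume exists yet.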

We now have Local storage, Jiva and a 3-node Kubernetes cluster. This is a good moment to introduce cStor.